Test Settings

The test settings area allows you to configure various options for your test. It can be accessed by clicking the “Settings” button at the top of your test in the Ghost Inspector application.

Table of Contents

- Test Details

- Test Schedule

- Browser Access

- Browser Versions

- Step Timing

- Display Options

- Geolocation

- Data Sources

- Modularization

- External Links

- Miscellaneous

- Notifications

- Combining multiple browsers, screen sizes and geolocations

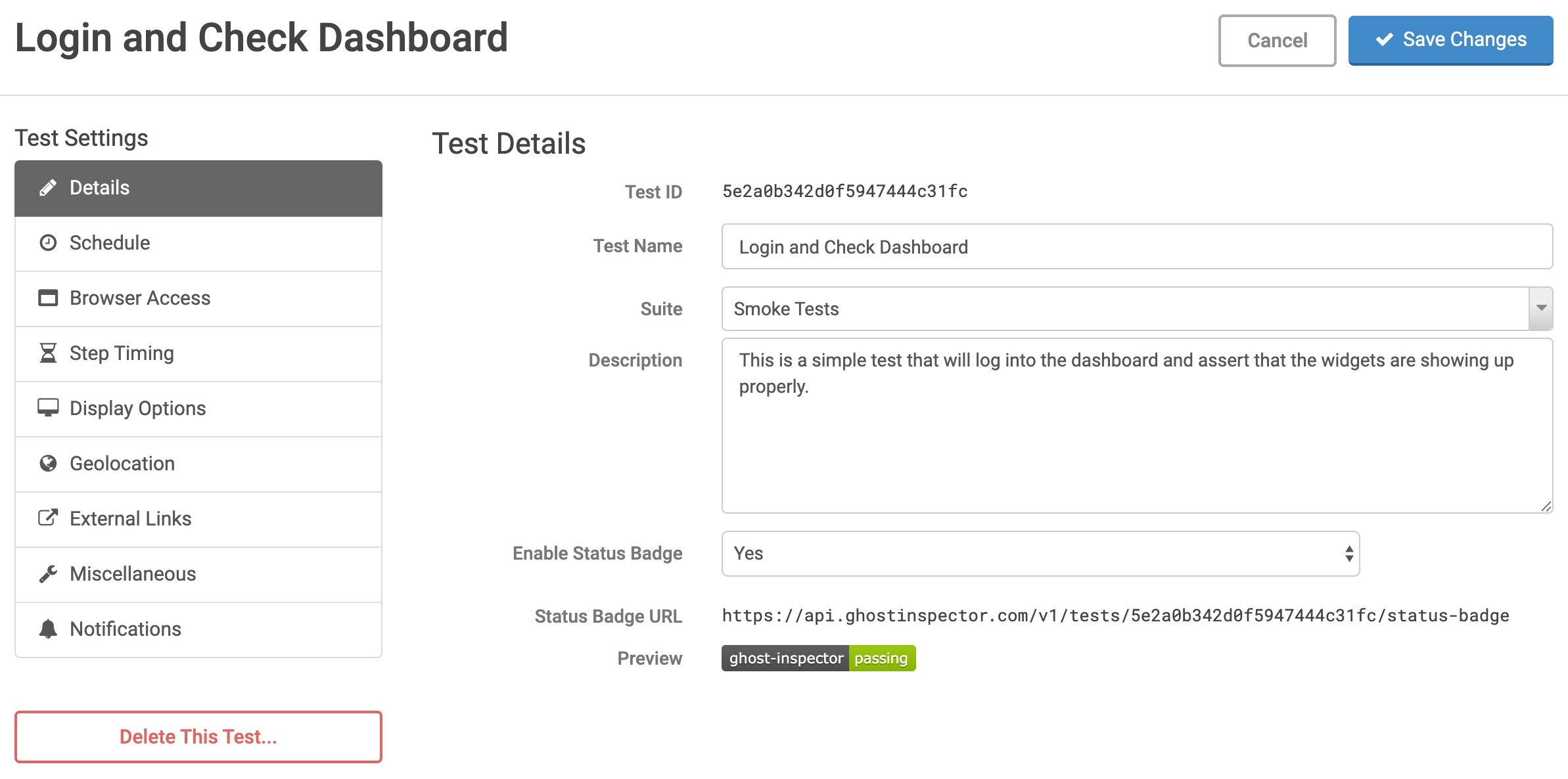

Test Details

In this section you can make changes to the basic information of your test.

- Test ID

- The ID of the test in our system. This ID is used when interacting with the test through our API.

- Test Name

- The name of the test.

- Suite

- The suite in which the test is located. All tests exist within a suite and inherit that suite’s settings.

- Description

- An optional extended description of the test.

- Enable Status Badge

- This setting enables the use of public status badges for this test.

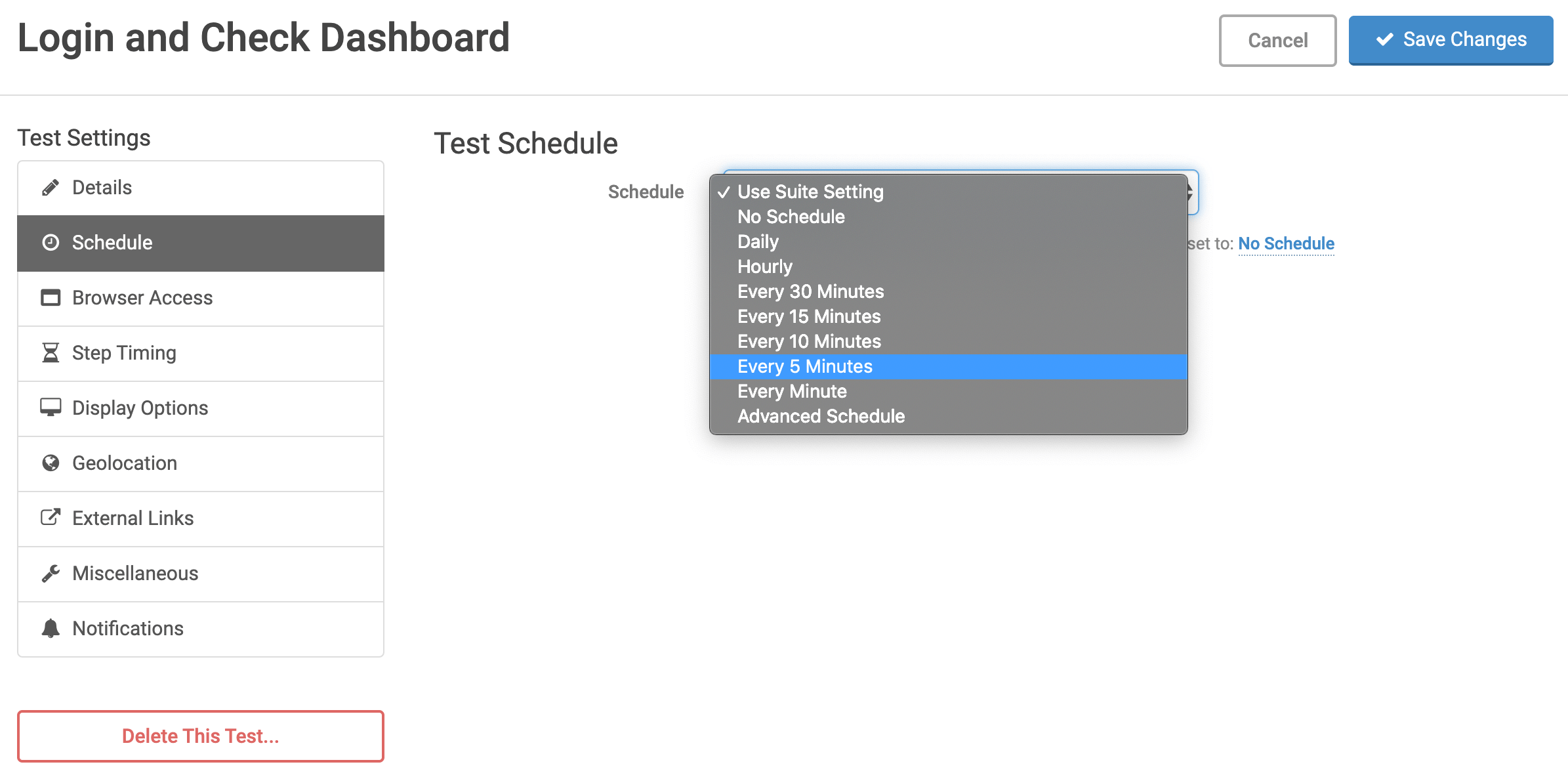

Test Schedule

Tests default to their suite’s schedule. You can leave this setting as is to run the test as part of the suite’s scheduled run, or select a more specific schedule. See our documentation on scheduling test runs for more details.

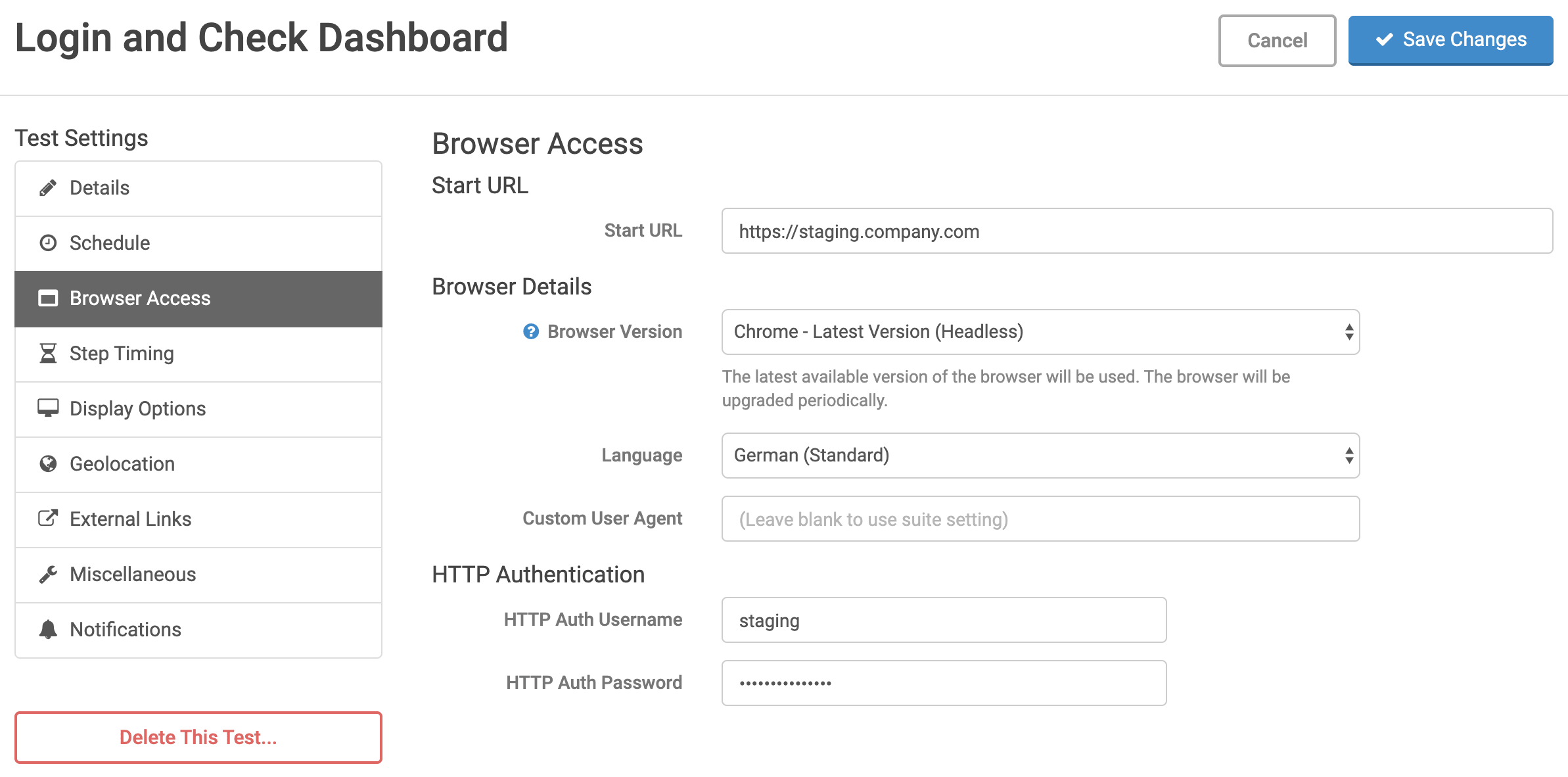

Browser Access

This section allows you to define various browser-related configurations for the test. These settings can also be defined at the suite level and left blank here, so that suite-wide changes can be made easily.

- Start URL

- The URL that the test will initially open and start on. This can also be managed in the test editor.

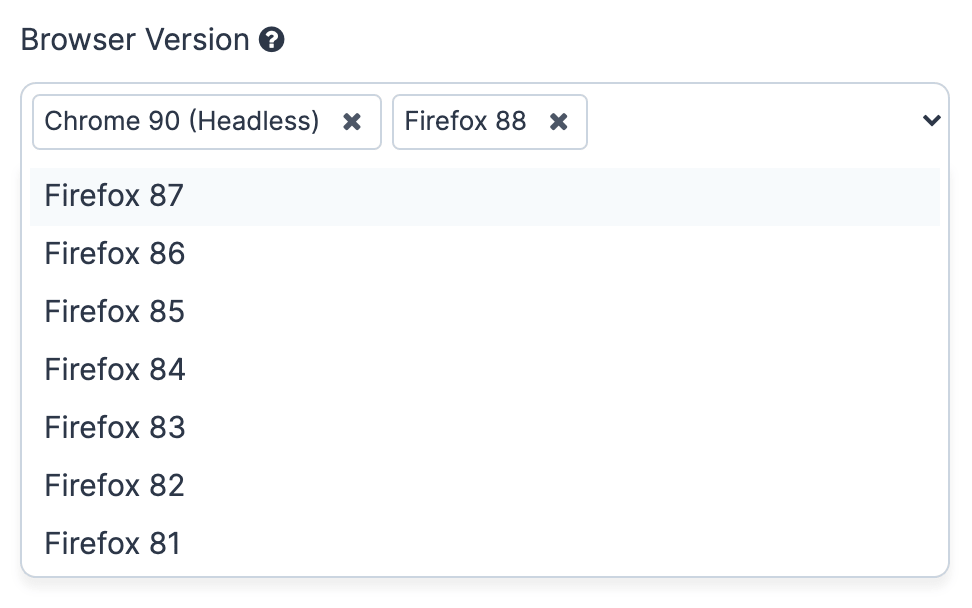

- Browser Version

- The web browser engine and version to be used when executing the test. Select more than one version to trigger multiple executions using different versions at the same time. See Browser Versions below for details.

- Language

- Web browsers communicate a preferred language through the Accept-Language HTTP header. A specific language can be selected, if desired.

- Custom User Agent

- Web browsers define a User-Agent to identify themselves. Ghost Inspector sets a default user agent string matching the test’s browser choice with “Ghost Inspector” concatenated onto the end of it. However, a custom user agent string can be specified, if desired.

- HTTP Authentication

- These fields can be used to specify a username and password if the website accessed by the test requires HTTP authentication.
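To make the Language, Custom User Agent and HTTP Authentication settings more concrete, here is a minimal Python sketch of the request headers they influence. It is illustrative only, not Ghost Inspector's implementation: the function name `build_request_headers` is hypothetical, it assumes a single space separates the browser's own user agent from the “Ghost Inspector” suffix, and it assumes HTTP Authentication means the Basic scheme.

```python
import base64


def build_request_headers(browser_user_agent, language=None,
                          custom_user_agent=None, username=None, password=None):
    """Return the headers a browser might send for these settings (hypothetical helper)."""
    headers = {}
    # A Custom User Agent replaces the default entirely; otherwise the
    # "Ghost Inspector" suffix is appended (assumption: one space separator).
    if custom_user_agent:
        headers["User-Agent"] = custom_user_agent
    else:
        headers["User-Agent"] = f"{browser_user_agent} Ghost Inspector"
    # The Language setting maps onto the Accept-Language header.
    if language:
        headers["Accept-Language"] = language
    # Assumption: HTTP Authentication uses the Basic scheme,
    # i.e. "Basic " + base64("username:password").
    if username is not None and password is not None:
        token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
        headers["Authorization"] = f"Basic {token}"
    return headers
```

For example, `build_request_headers("Mozilla/5.0 (X11; Linux x86_64)", language="fr-FR", username="staging", password="secret")` yields all three headers, while leaving the optional arguments out produces only the default User-Agent.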

Browser Versions

- Google Chrome

- We offer various versions of the Google Chrome browser in both “Headless” and “Traditional” modes. Headless mode is an official Chrome option designed for testing that runs the browser on our servers without visually rendering it. It saves resources and allows the browser to run faster, so we strongly recommend using Headless mode whenever possible. Traditional mode loads and renders the Chrome browser in a desktop environment on our servers, so it is more resource-intensive and slower. We only recommend Traditional mode if you have a specific test that interacts with viewport properties or other desktop-related features. If you intend to open a PDF file during your test, then Chrome “Traditional” mode must be used.

- Mozilla Firefox

- We offer various versions of the Mozilla Firefox browser; Firefox v66+ is recommended. Firefox v60 and older are considered “Legacy” but offer a couple of non-standard features, such as network filters and the ability to customize HTTP headers. Unfortunately, we’re unable to support these features in newer versions of Firefox and Chrome due to limitations of the new automation APIs. Note also that Firefox v60 and older cannot access HTTPS websites that use an invalid SSL certificate.
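The browser guidance above boils down to a few checks. The Python sketch below encodes them for illustration; the function names and flags are hypothetical, and it uses v60 as the Legacy cutoff simply because that is the version the text names.

```python
def recommend_chrome_mode(opens_pdf=False, uses_viewport_or_desktop_features=False):
    """Headless unless the test needs something only Traditional mode provides."""
    if opens_pdf or uses_viewport_or_desktop_features:
        return "traditional"
    return "headless"


def check_firefox_version(major, needs_network_filters=False,
                          needs_custom_headers=False, invalid_ssl_site=False):
    """Return a list of conflicts between a Firefox major version and test needs."""
    problems = []
    legacy = major <= 60  # v60 and older are "Legacy"
    if (needs_network_filters or needs_custom_headers) and not legacy:
        problems.append("network filters and custom HTTP headers need Firefox v60 or older")
    if invalid_ssl_site and legacy:
        problems.append("Firefox v60 and older cannot open HTTPS sites with invalid certificates")
    return problems
```

A test that needs custom headers and also visits a staging site with an invalid certificate gets a conflict on either side of the cutoff, which is exactly the trade-off the paragraph describes.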

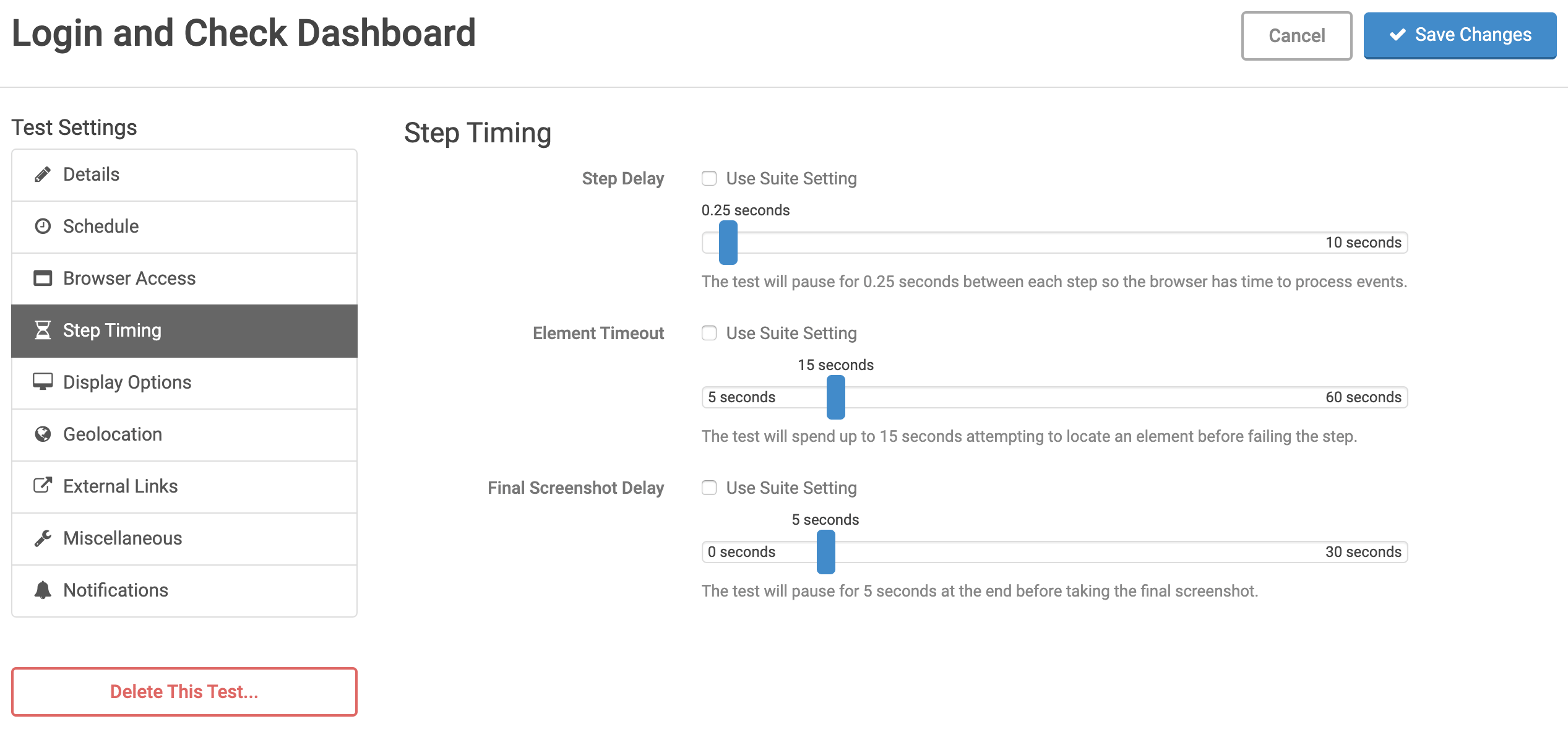

Step Timing

Here you can adjust the timing delays for various situations in the test.

- Step Delay

- Step Delay allows you to add a pause of up to 10 seconds between each step, effectively adjusting the speed of your test. The default of 250 ms is intended to give JavaScript events time to complete after actions are carried out.

- Element Timeout

- Ghost Inspector automatically waits for the element in every step before performing that step. This is sometimes referred to as “implicit waiting” and is very helpful in ensuring that adequate time is provided for elements to appear. Element timeout is the maximum amount of time spent searching for an element before the step is considered a failure. The default is 15 seconds. However, in situations where elements may take longer than that to appear (such as a long delay while processing a payment), this setting can be extended to up to 60 seconds. Note that explicit “Pause” steps can always be used to delay longer.

- Final Screenshot Delay

- The Final Screenshot Delay is a delay at the end of the test before taking the screenshot. It’s helpful for ensuring that everything has loaded properly before the capture and comparison is performed.
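“Implicit waiting” is essentially a polling loop bounded by the Element Timeout. The Python sketch below shows the idea under stated assumptions: `find` is a hypothetical callable that returns the element or `None`, the 0.1 s poll interval is an arbitrary choice, and the clock and sleep functions are injectable only so the logic can be tested without real waiting. The limits (15 s default, 60 s maximum, 250 ms default step delay, 10 s maximum) come from the settings above.

```python
import time


def wait_for_element(find, element_timeout=15.0, poll_interval=0.1,
                     clock=time.monotonic, sleep=time.sleep):
    """Poll find() until it returns an element or element_timeout seconds elapse."""
    if not 0 < element_timeout <= 60:
        raise ValueError("element timeout must be greater than 0 and at most 60 seconds")
    deadline = clock() + element_timeout
    while True:
        element = find()
        if element is not None:
            return element
        if clock() >= deadline:
            # Not found in time: the step is considered a failure.
            raise TimeoutError(f"element not found within {element_timeout} s")
        sleep(poll_interval)


def step_pause(step_delay_ms=250):
    """Return the pause, in seconds, inserted between steps (capped at 10 s)."""
    if not 0 <= step_delay_ms <= 10_000:
        raise ValueError("step delay must be between 0 and 10,000 ms")
    return step_delay_ms / 1000
```

Waits longer than 60 seconds are outside this loop by design; as noted above, an explicit “Pause” step covers those.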

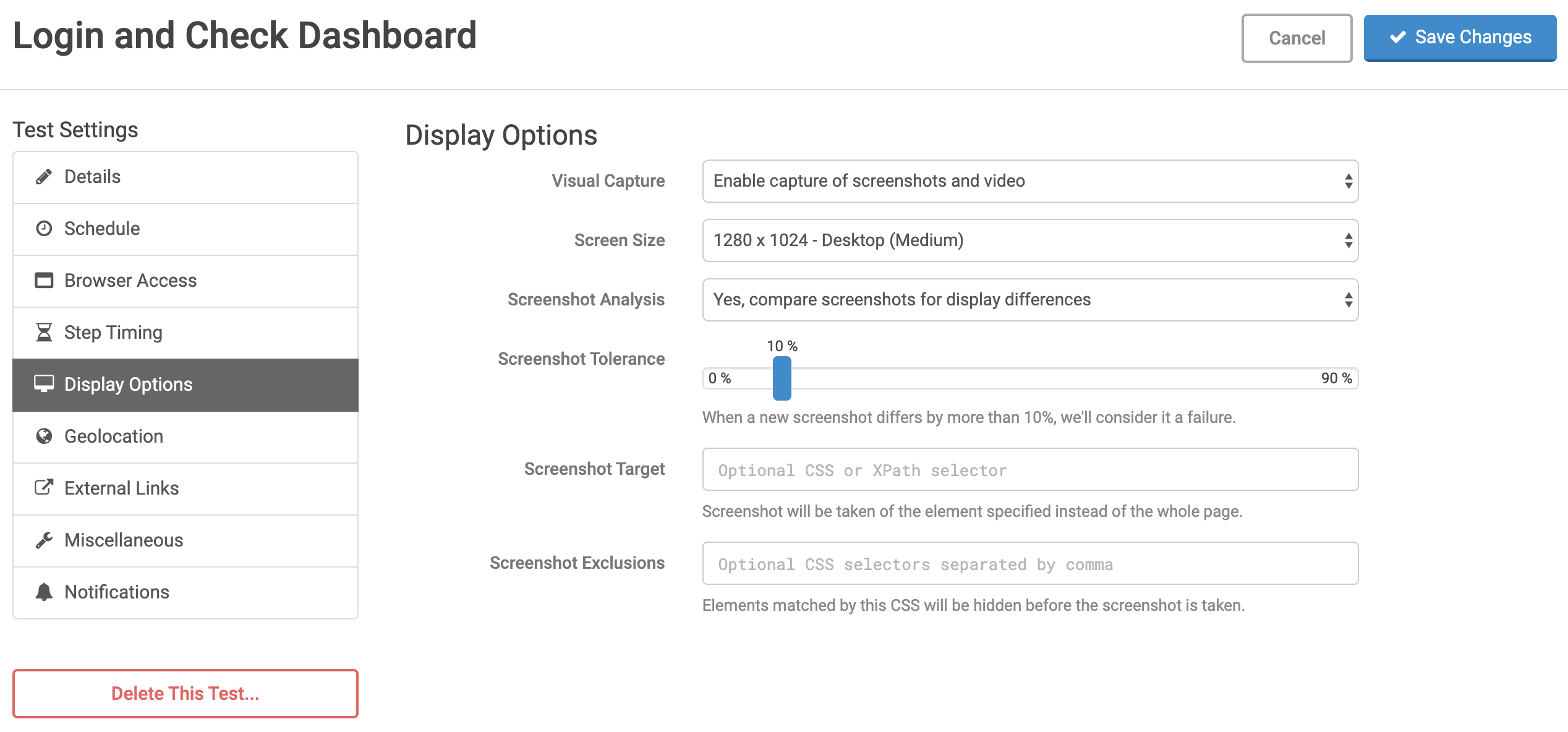

Display Options

In this section you can make changes to the display size and settings for the test, including options related to the Screenshot Comparison feature.

- Visual Capture

- Enable or disable the capturing of screenshots and video during the test run.

- Screen Size

- The screen size to be used for the test. Select more than one screen size to trigger multiple executions using different screen sizes at the same time.

- Screenshot Analysis

- Enable or disable the screenshot comparison feature.

- Screenshot Tolerance

- The acceptable amount of change (as a percentage of total pixels) allowed in the screenshot before a failure is triggered.

- Screenshot Target

- Specify an element (via CSS selector) to take the final screenshot of that element only, instead of the whole page.

- Screenshot Exclusions

- A comma-separated list of CSS selectors to hide before the final screenshot is taken. This setting allows dynamic elements (which may cause a screenshot failure) to be hidden. It’s helpful for removing elements which may differ on each page load, such as advertisements, carousels and rotating images or text. The `visibility` of all matching elements is set to `hidden`, but layout is maintained.
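The Screenshot Tolerance and Screenshot Exclusions settings can be illustrated with a deliberately simplified Python sketch. It is not Ghost Inspector's comparison algorithm: it assumes images are flat lists of RGB tuples of equal length, counts any changed pixel as a difference, and assumes a run fails only when the changed percentage exceeds the tolerance. The exclusion helper shows why `visibility: hidden` keeps the layout stable; its naive comma split would break selectors that themselves contain commas, such as `:is(a, b)`.

```python
def changed_pixel_percent(baseline, current):
    """Percentage of pixels that differ between two same-sized images."""
    if len(baseline) != len(current):
        raise ValueError("images must have the same number of pixels")
    if not baseline:
        return 0.0
    changed = sum(1 for a, b in zip(baseline, current) if a != b)
    return 100.0 * changed / len(baseline)


def screenshot_passes(baseline, current, tolerance_percent):
    # Assumption: a change equal to the tolerance is still acceptable.
    return changed_pixel_percent(baseline, current) <= tolerance_percent


def exclusion_css(exclusions):
    """Build a CSS rule hiding every selector in a comma-separated list.

    visibility: hidden keeps each element's box, so nothing else moves.
    """
    selectors = [s.strip() for s in exclusions.split(",") if s.strip()]
    if not selectors:
        return ""
    return ", ".join(selectors) + " { visibility: hidden !important; }"
```

With a 100-pixel baseline and one changed pixel, the change is 1%, so a tolerance of 1% passes and 0.5% fails.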

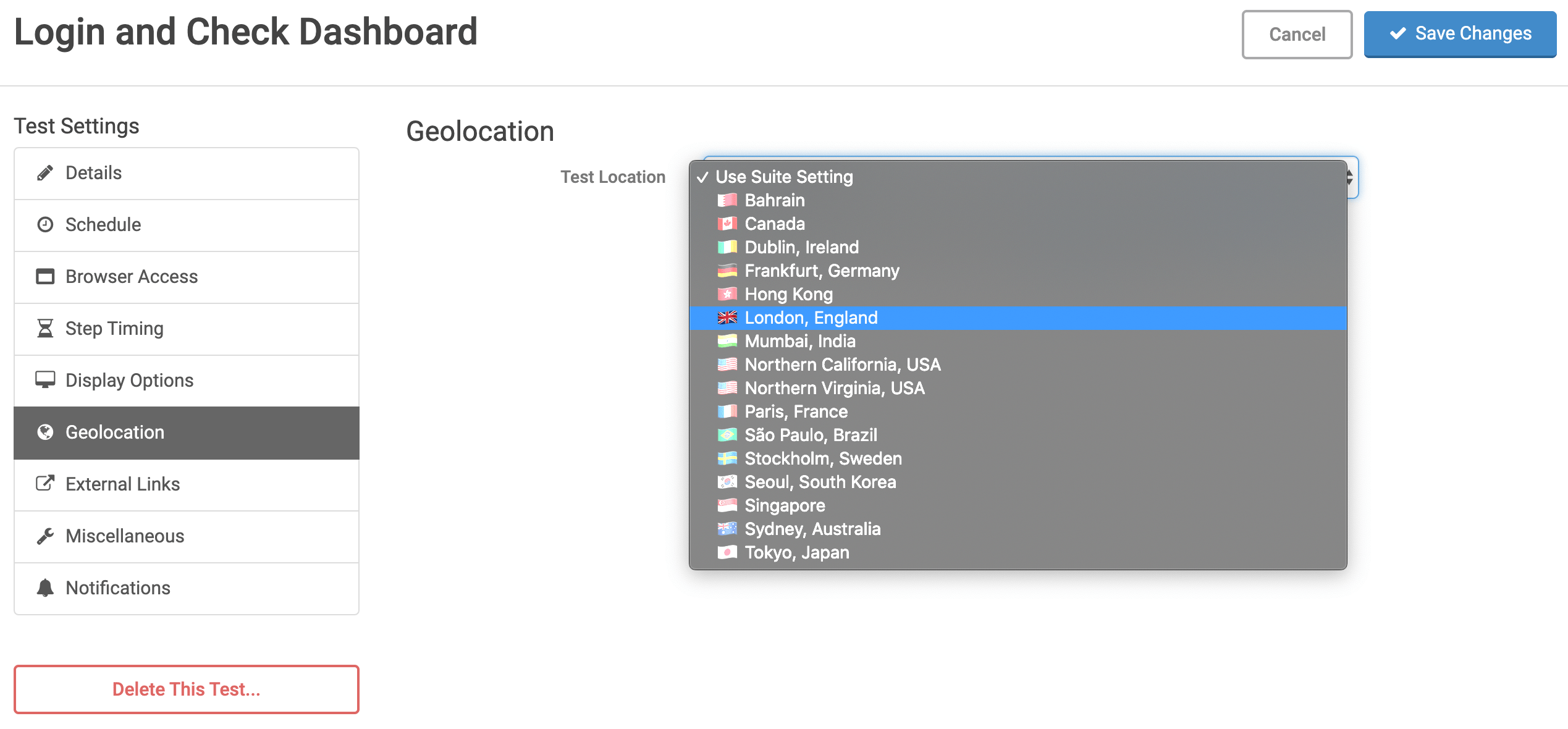

Geolocation

Ghost Inspector offers the ability to run your tests from specific regions around the world and will use an IP address from that region. By default, tests are run from the AWS Northern Virginia, USA region (us-east-1). You may also select more than one region to trigger multiple executions using different regions at the same time.

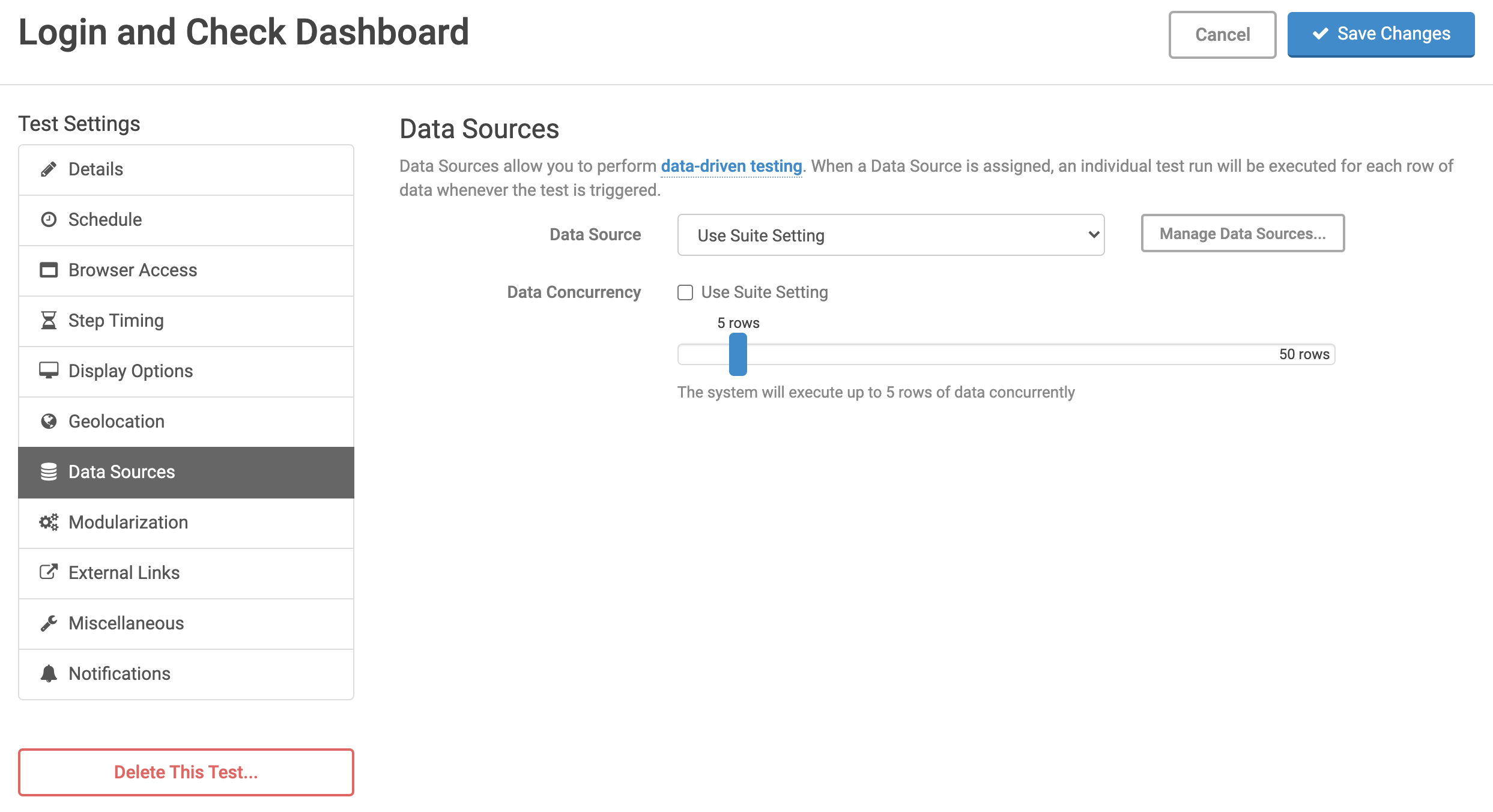

Data Sources

Data Sources allow you to perform data-driven testing. When a Data Source is assigned, an individual test run will be executed for each row of data whenever the test is triggered.

- Data Source

- Choose a Data Source from your Organization Settings. Once a Data Source is assigned, it will be used for all future executions, including via the application, the API, scheduled executions, and AWS CodePipeline executions.

- Data Concurrency

- The Data Concurrency setting allows you to specify the maximum number of data rows that will be executed at one time. This applies to both one-time CSV executions and test runs triggered with a Data Source.
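Data-driven execution is one test run per row, with Data Concurrency acting as a cap on how many rows run at once. The Python sketch below models that with a thread pool whose size is the concurrency limit. It is an illustration, not the actual scheduler: `run_test` is a hypothetical callable that performs one run using a row (a dict of column name to value) and returns its result.

```python
import csv
import io
from concurrent.futures import ThreadPoolExecutor


def run_data_driven(csv_text, run_test, data_concurrency=1):
    """Run run_test once per CSV row, with at most data_concurrency rows in flight."""
    if data_concurrency < 1:
        raise ValueError("data_concurrency must be at least 1")
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    if not rows:
        return []
    with ThreadPoolExecutor(max_workers=data_concurrency) as pool:
        # pool.map returns results in row order, even if rows finish out of order.
        return list(pool.map(run_test, rows))
```

A CSV with a header line `email,plan` and two data rows produces exactly two runs; with `data_concurrency=1` they execute strictly one after another.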

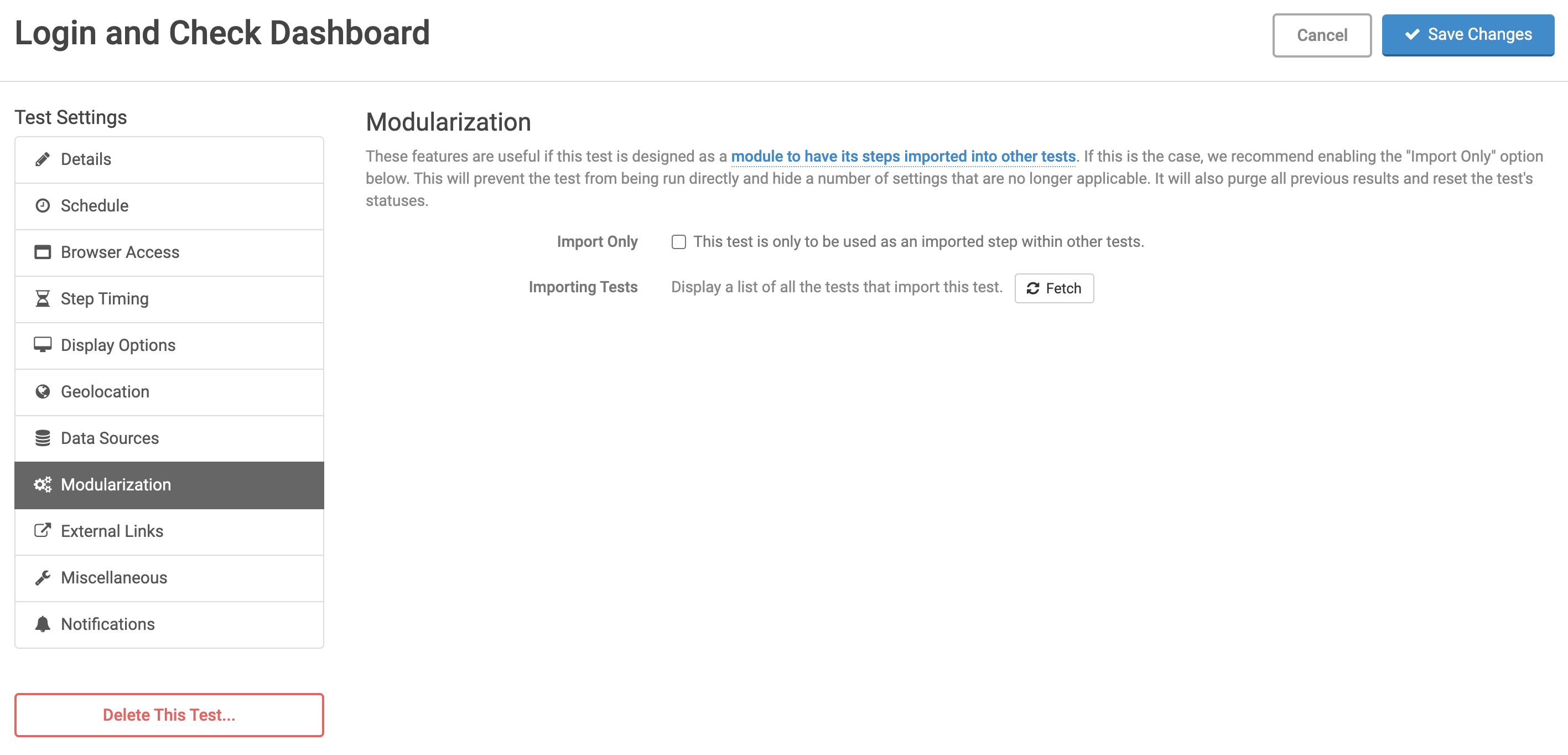

Modularization

These features are useful if your test is designed as a module to have its steps imported into other tests. Enabling the “Import Only” option will prevent the test from being run directly and hide a number of settings that are no longer applicable. It will also purge all previous results and reset the test’s statuses.
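One way to picture a module is as a list of steps spliced into any test that imports it. The Python sketch below assumes a hypothetical data model (a dict of test ID to `{"import_only": bool, "steps": [...]}`, with an import step written as `{"import": "<module test id>"}`); Ghost Inspector's own storage format is not described here. It also shows the “Import Only” rule: such a test can be expanded into others but not run on its own.

```python
def expand_steps(test_id, tests, _seen=frozenset()):
    """Return a test's steps with each import step replaced by the module's steps."""
    if test_id in _seen:
        raise ValueError(f"circular import involving {test_id!r}")
    seen = _seen | {test_id}
    expanded = []
    for step in tests[test_id]["steps"]:
        if "import" in step:
            expanded.extend(expand_steps(step["import"], tests, seen))
        else:
            expanded.append(step)
    return expanded


def run_directly(test_id, tests):
    """Return the steps that would execute, refusing Import Only tests."""
    if tests[test_id].get("import_only"):
        raise RuntimeError(f"{test_id} is Import Only and cannot be run directly")
    return expand_steps(test_id, tests)
```

Tracking `seen` per import path (rather than globally) lets the same module be imported twice in one test while still catching modules that import each other.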

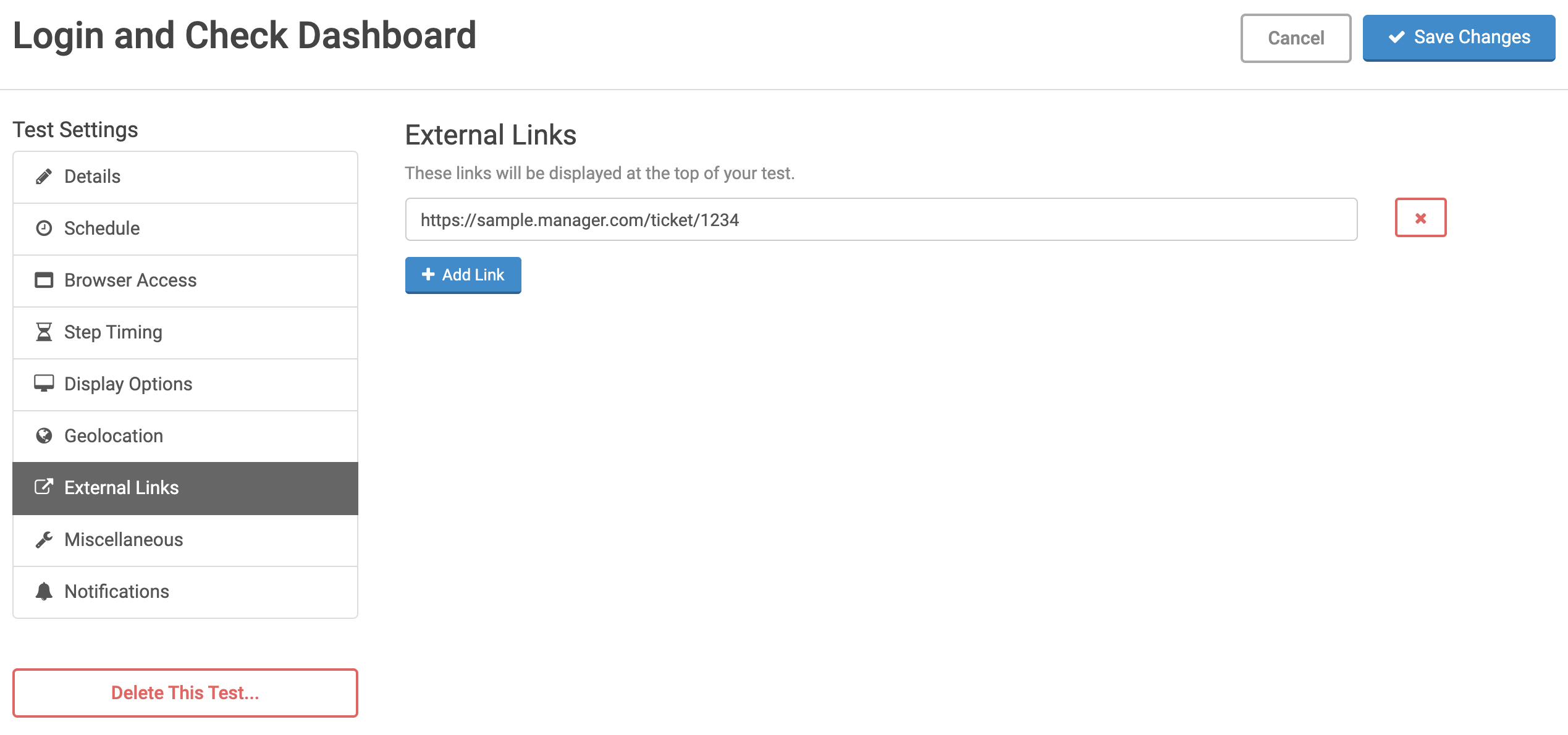

External Links

Ghost Inspector offers the ability to associate external links with your tests. For example, you can link a failing test to a Jira issue or a Zendesk support ticket. Anything with a permanent URL can be added in the test settings and will be displayed on the test for all users.

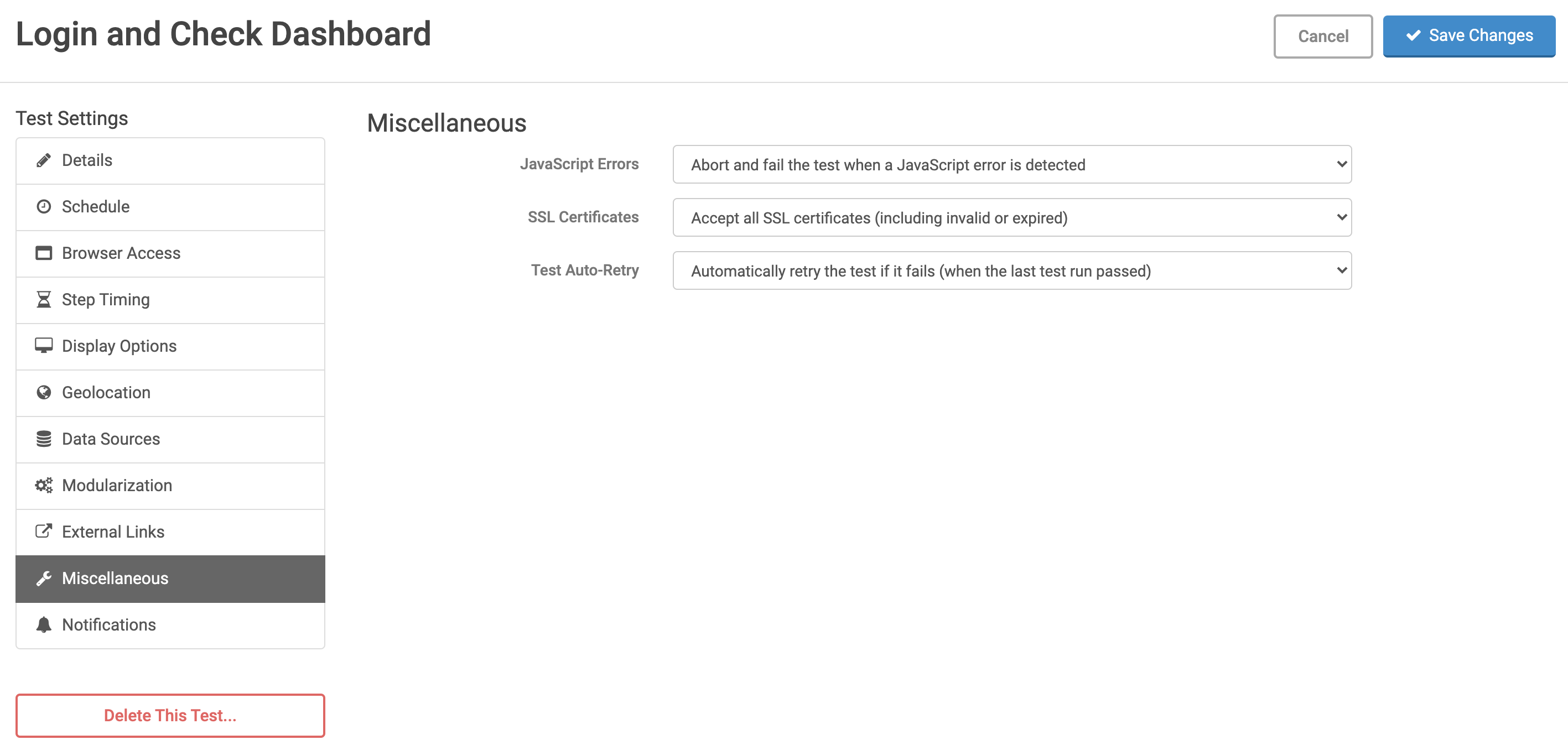

Miscellaneous

This section includes miscellaneous settings and options for the test.

- JavaScript Errors

- Ghost Inspector captures console output and includes it in test results in the “Console Output” section below the steps. We flag error output and color it red to differentiate it from standard logging output. By default, errors and uncaught JavaScript exceptions will not explicitly fail the test, assuming that the steps can still be carried out properly. However, this setting can be enabled to fail the test run immediately and exit when a JavaScript error is detected.

- SSL Certificates

- Ghost Inspector will accept all SSL certificates by default, even expired and invalid certificates (which are often used on staging servers). This setting can be enabled if you prefer that your test only accept valid SSL certificates.

- Test Auto-Retry

- Auto-Retry is a feature (enabled by default) that re-runs the test if a failure is encountered when the test was previously passing. In other words, if the test passes several times in a row and then fails, the system will immediately (and transparently) retry it before saving the result. It is enabled by default because it helps users combat false positives and fluke errors. However, in some situations (typically where “state” is involved in the test run), re-running the test can cause a problem. In those cases, this feature should be disabled.

When a test has been auto-retried, the result will include a yellow “Auto-Retry” label in the header of the steps. Note, however, that we do not currently keep the initial failure that triggered the retry: when the test fails and the retry is triggered, the first failed result is discarded and replaced with the retry. For this reason, you will not see two results when a retry occurs, only the final test run.
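The Auto-Retry rule can be summarized in a few lines of Python. This is a sketch of the behavior described above, not the system's code: `run_once` is a hypothetical callable returning a dict with a `"passed"` key, and the sketch assumes a single retry per run.

```python
def run_with_auto_retry(run_once, previously_passing, auto_retry=True):
    """Retry once when a previously passing test fails; keep only the final result."""
    result = run_once()
    if auto_retry and previously_passing and not result["passed"]:
        retry = run_once()
        # Corresponds to the yellow "Auto-Retry" label; the first failure is dropped.
        retry["auto_retried"] = True
        return retry
    return result
```

A test that was already failing is not retried, and disabling the setting (`auto_retry=False`) saves the first failure as-is, which is what you want when a second run would be affected by state left behind by the first.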

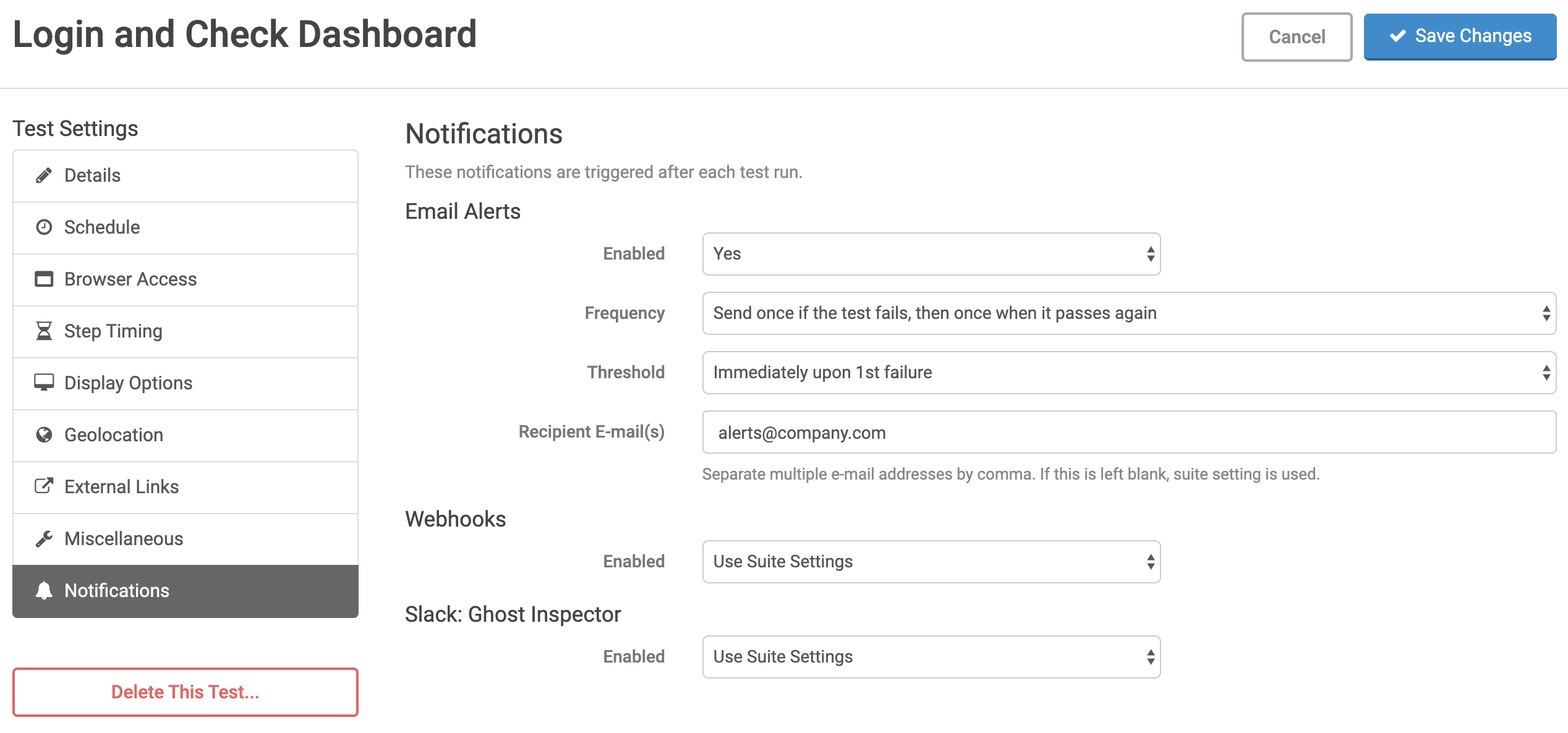

Notifications

Test notifications can be set at the organization, suite and test levels. They are inherited and can be overridden at each level. Options set here will be applied to this test only. You can use suite settings to apply them to the whole suite, or organization settings to apply them to your entire account. Various notification options are available, including email, webhooks and more.
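Inheritance with overrides amounts to a lookup from the most specific level to the least specific one. The Python sketch below assumes that `None` means “not set at this level” and that a value set at a lower level replaces, rather than merges with, the inherited one; the setting names are hypothetical.

```python
def effective_setting(name, test, suite, organization):
    """Resolve a setting: the test overrides the suite, which overrides the organization."""
    for level in (test, suite, organization):
        value = level.get(name)
        if value is not None:  # an empty list still counts as an explicit choice
            return value
    return None
```

For example, if the organization emails `team@example.com` and the suite sets `qa@example.com`, a test that leaves the field blank notifies `qa@example.com`, while a test that explicitly sets an empty list notifies no one.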

Combining multiple browsers, screen sizes and geolocations

You may wish to test your website using various browser versions, screen sizes, and geolocations. Ghost Inspector allows you to choose multiple options for these settings at the test or suite level.

The system will create and execute a result for every combination of your selections. For example, if you set the browser to Chrome (Latest) and Firefox (Latest), set the geolocation to Canada and Singapore, and set the screen size to 1024x768 and 800x600, you will end up with the following combination of 8 test runs:

- Chrome (Latest), Canada, 1024x768

- Chrome (Latest), Canada, 800x600

- Chrome (Latest), Singapore, 1024x768

- Chrome (Latest), Singapore, 800x600

- Firefox (Latest), Canada, 1024x768

- Firefox (Latest), Canada, 800x600

- Firefox (Latest), Singapore, 1024x768

- Firefox (Latest), Singapore, 800x600
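The list above is the Cartesian product of the three selections. Python's `itertools.product` reproduces it in the same order; the values are the ones from the example.

```python
from itertools import product

browsers = ["Chrome (Latest)", "Firefox (Latest)"]
geolocations = ["Canada", "Singapore"]
screen_sizes = ["1024x768", "800x600"]

# One test run per combination; product varies the last list fastest,
# which matches the order of the list above.
runs = list(product(browsers, geolocations, screen_sizes))
for browser, geolocation, size in runs:
    print(f"{browser}, {geolocation}, {size}")
print(len(runs))  # 8
```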

If a data source is set on the test or suite or you are executing with a CSV, each row will also be multiplied by the chosen combinations. You can calculate how many test runs to expect by multiplying all combinations together:

Number of browsers × Number of screen sizes × Number of geolocations × Number of data source rows = Total number of test runs

For example, if a test has 2 browsers, 3 screen sizes and 2 geolocations and is executed with a CSV file that has 5 rows of data, you will end up with a total of 2 × 3 × 2 × 5 = 60 test runs.
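The same multiplication as a small Python helper, a sketch for estimating run counts before triggering an execution. The function name is hypothetical; `data_rows` defaults to 1 for executions without a Data Source or CSV.

```python
from math import prod


def total_test_runs(browsers, screen_sizes, geolocations, data_rows=1):
    """Multiply the number of choices for each setting."""
    counts = (browsers, screen_sizes, geolocations, data_rows)
    if any(c < 1 for c in counts):
        raise ValueError("every count must be at least 1")
    return prod(counts)


print(total_test_runs(2, 3, 2, 5))  # 60
```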